Introduction

As AI becomes part of everyday business operations, knowing how to get the most out of language models becomes crucial. Perplexity Labs tracks a central metric called perplexity, which measures how well a language model predicts text. It is critical to developing trustworthy AI business and marketing solutions. In this blog, we’ll look at what perplexity is, why it matters, and how Perplexity Labs uses it to build smarter, more impactful AI systems.

What is Perplexity in AI?

Perplexity is one of the most important, and most misunderstood, metrics in language modeling. It measures how well a language model predicts the next word in a sequence. For AI developers and business leaders, it provides a benchmark for how well the model has captured the structure and meaning of language.

At Perplexity Labs, perplexity is not just a number but a philosophy. We use it across the model lifecycle to build AI systems that are not merely functional but robust and fluent across a variety of industries.

Here’s why it matters:

1. What Perplexity Tells Us

- It measures how “surprised” a model is by the correct output. Less surprise indicates a better grasp of the language.

- A lower perplexity score means the model has better captured syntax, grammar, and context.

- It’s a diagnostic tool to identify weak spots in the model’s training and tuning.
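Perplexity is conventionally defined as the exponential of the average negative log-probability a model assigns to each correct next token, so a model that guesses uniformly among k options has perplexity k. The sketch below assumes you already have those per-token probabilities from some model; the function name and sample numbers are illustrative, not taken from any particular system.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity from the probability the model gave each correct next token.

    PPL = exp(-(1/N) * sum(log p_i)): the exponential of the average
    negative log-likelihood (cross-entropy in nats) over N tokens.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(not 0.0 < p <= 1.0 for p in token_probs):
        raise ValueError("probabilities must lie in (0, 1]")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Illustrative values: a model that puts high probability on the right tokens
# is "less surprised" and therefore has lower perplexity.
confident = [0.9, 0.8, 0.95, 0.85]
uncertain = [0.2, 0.1, 0.3, 0.25]
print(f"confident model: {perplexity(confident):.2f}")  # about 1.15
print(f"uncertain model: {perplexity(uncertain):.2f}")  # about 5.08
```

In practice, training frameworks usually report the average per-token cross-entropy loss directly; when that loss is measured in nats, perplexity is simply `math.exp(loss)`.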

2. Why It Matters for AI Business

- Business communication requires clarity and precision.

- Perplexity Labs measures perplexity to assess the language models deployed in customer service chatbots, internal knowledge bases, and report automation software.

- Models with low perplexity are less likely to produce irrelevant or awkward phrasing that could harm the customer experience or brand voice.

3. Supporting AI Marketing Solutions

- Marketing messages need to be clear, compelling, and aligned with the brand’s tone.

- We use perplexity scoring when fine-tuning models for AI marketing solutions such as ad copywriting, blog generation, and social media content.

- Lower perplexity helps the model produce content with a confident, brand-consistent, and conversion-focused tone.

4. Powering Business Applications of Generative AI

- Perplexity helps navigate the trade-off between creativity and coherence in business-focused generative AI.

- It helps keep AI-authored content coherent, whether it is reports, FAQs, or summaries.

- Perplexity Labs combines perplexity with empirical testing to deliver smarter, more dependable outputs.

For us, perplexity (literally, confusion) is a starting point: a signpost that guides us toward smart, usable AI for industries everywhere. That’s how we turn sophisticated language models into real, high-quality AI business solutions.

How Perplexity Labs Uses Perplexity Scores

While many companies simply use pre-trained models as they are, Perplexity Labs takes a more customized, performance-driven approach. We apply perplexity scoring throughout the AI lifecycle, from model training to production deployment, to consistently produce models with strong predictive accuracy and utility.

This measure also helps us identify which models to adjust or enhance for specific industry use cases. Whether the goal is powering AI business solutions or refining AI marketing solutions, perplexity serves as a common quality measure.

This is what enables us to develop AI that doesn’t just sound smart but is also deployable in real-world situations.

Here’s how we use perplexity in practice, so you can see whether the approach works for you:

1. Training Evaluation

A low perplexity on held-out data suggests the model is not merely memorizing its training data but has learned to extract patterns and structure from the language. During training, we monitor perplexity to detect underfitting or overfitting early. It also lets us test how well the model performs out of domain, which is essential for long-term generalization.

When validation perplexity plateaus or starts rising during training, it is a sign to re-examine the dataset or the model architecture. Catching this early lets you tune the baseline model before it moves into production.
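A minimal sketch of this kind of monitoring, assuming you log the average per-token loss (in nats) on both training and held-out validation data each epoch. The stopping rule (`patience`, `min_delta`) is a common early-stopping heuristic chosen for illustration, and the loss history is made up.

```python
import math


def ppl(avg_nll: float) -> float:
    """Convert an average per-token negative log-likelihood (nats) to perplexity."""
    return math.exp(avg_nll)


def should_stop(val_ppls: list[float], patience: int = 2, min_delta: float = 0.01) -> bool:
    """True when the best validation perplexity of the last `patience` epochs is not
    at least `min_delta` (relative) below the best seen before them, i.e. it has
    plateaued or started rising."""
    if len(val_ppls) <= patience:
        return False
    best_before = min(val_ppls[:-patience])
    recent_best = min(val_ppls[-patience:])
    return recent_best > best_before * (1 - min_delta)


def overfit_gap(train_ppl: float, val_ppl: float) -> float:
    """Validation/training perplexity ratio; a steadily growing ratio hints at overfitting."""
    return val_ppl / train_ppl


# Made-up (train_nll, val_nll) per epoch: training keeps improving,
# validation improves, then turns upward.
history = [(3.0, 3.1), (2.5, 2.7), (2.1, 2.5), (1.8, 2.52), (1.5, 2.6)]

val_ppls: list[float] = []
stop_epoch = None
for epoch, (train_nll, val_nll) in enumerate(history, start=1):
    train_ppl, val_ppl = ppl(train_nll), ppl(val_nll)
    val_ppls.append(val_ppl)
    print(f"epoch {epoch}: train {train_ppl:.1f}, val {val_ppl:.1f}, "
          f"gap x{overfit_gap(train_ppl, val_ppl):.2f}")
    if should_stop(val_ppls):
        stop_epoch = epoch
        print(f"validation perplexity stalled at epoch {epoch}; revisit data or architecture")
        break
```

With these invented numbers the rule fires at epoch 5: validation perplexity has not improved on its epoch-3 low for two epochs, while training perplexity is still falling, which is the classic overfitting pattern.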

2. Use-Case Testing

Every business problem is unique, so we evaluate multiple model versions on each use case and compare their perplexity scores to find the best performer. For instance, a model that summarizes insurance claims may need to be tuned for different nuances than one that handles e-commerce customer support.

Perplexity helps us decide which version offers the right balance of speed, relevance, and clarity for a given situation. When comparing your models’ capabilities against the needs of an AI business solution, it serves as a stable reference point. That’s how we know that what we deploy isn’t just usable, but fit for purpose.
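To make the comparison concrete, here is a hedged sketch that picks, among several hypothetical model versions, the one with the lowest perplexity on a use-case evaluation set while respecting a latency budget (standing in for the speed trade-off). The candidate names, log-probabilities, and latencies are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    token_logprobs: list[float]  # natural-log probability of each reference token in the eval set
    latency_ms: float            # measured median response latency

    @property
    def perplexity(self) -> float:
        return math.exp(-sum(self.token_logprobs) / len(self.token_logprobs))


def pick_model(candidates: list[Candidate], max_latency_ms: float) -> Candidate:
    """Lowest-perplexity candidate among those within the latency budget."""
    eligible = [c for c in candidates if c.latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no candidate meets the latency budget")
    return min(eligible, key=lambda c: c.perplexity)


# Hypothetical versions, all scored on the same insurance-claims evaluation text.
candidates = [
    Candidate("base", [-2.0, -1.8, -2.2], latency_ms=120.0),
    Candidate("claims-tuned", [-1.1, -0.9, -1.3], latency_ms=180.0),
    Candidate("large", [-0.8, -0.7, -0.9], latency_ms=450.0),
]

for c in candidates:
    print(f"{c.name}: perplexity {c.perplexity:.2f}, {c.latency_ms:.0f} ms")
print("chosen:", pick_model(candidates, max_latency_ms=200.0).name)
```

One design caveat: perplexity is only comparable across models that share the same tokenizer and are scored on the same evaluation text; otherwise the per-token averages measure different things.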

3. Language Quality Control

Perplexity Labs evaluates AI language performance to confirm that outputs are coherent, natural, and aligned with the client’s brand voice. This perplexity check is vital.

We compare perplexity scores across sample outputs and optimize to minimize awkward phrasing and ambiguity. Particularly in AI marketing solutions, where tone and style can be everything, perplexity keeps content clear and confident. By keeping perplexity low from day one, we reduce post-editing time and increase users’ trust in our generative AI systems.
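One way to turn this into a routine check, sketched under the assumption that a scoring model supplies a log-probability for each token of a generated sample: compute the sample’s perplexity, route high-perplexity samples to human review, and list unusually surprising tokens as likely awkward spots. Both thresholds and the `sample_report` helper are hypothetical and would need tuning per client.

```python
import math
from dataclasses import dataclass


@dataclass
class SampleReport:
    perplexity: float
    needs_review: bool
    surprising_tokens: list[str]


def sample_report(tokens: list[str], logprobs: list[float],
                  ppl_threshold: float = 20.0, token_threshold: float = -4.0) -> SampleReport:
    """Score one generated sample from per-token natural-log probabilities.

    Samples above `ppl_threshold` are routed to human review; tokens whose
    log-probability is below `token_threshold` are listed as likely awkward spots.
    """
    if not tokens or len(tokens) != len(logprobs):
        raise ValueError("need one log-probability per token")
    ppl = math.exp(-sum(logprobs) / len(logprobs))
    surprising = [tok for tok, lp in zip(tokens, logprobs) if lp < token_threshold]
    return SampleReport(ppl, ppl > ppl_threshold, surprising)


# Invented scores for two marketing-copy samples.
good = sample_report(["Our", "new", "plan", "saves", "you", "time"],
                     [-1.2, -0.8, -1.0, -0.9, -0.3, -0.6])
awkward = sample_report(["Our", "plan", "synergizes", "your", "time", "paradigm"],
                        [-1.2, -1.5, -7.5, -2.0, -3.0, -6.8])
print(good)
print(awkward)
```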

Why Perplexity Matters for Generative AI in Business

As companies deploy generative AI in business, reliability matters more than novelty. AI is now more than just automation; it drives mission-critical communications, customer engagement, content, and decision-making in businesses today.

Even state-of-the-art models can generate confusing, off-topic, or incoherent outputs when perplexity is not kept in check. High perplexity scores may indicate that a model is poorly matched to, or disconnected from, the intended context, which in turn can damage trust, credibility, and user experience.

At Perplexity Labs, perplexity is a readiness signal: it indicates whether a model is generating outputs that are fluent, coherent, and well matched to real-world language.

In business, this is not just important but crucial. Our researchers closely monitor and tune perplexity during both training and system development to ensure that every system communicates and performs consistently.

Here’s how businesses can use it across major areas:

1. AI Marketing Solutions

Language in AI-driven marketing must be powerful, accurate, and relevant. Using perplexity scores during tuning improves the tone, message structure, and brand fit of AI-generated content. Whether you’re writing social media updates, product descriptions, or email campaigns, low-perplexity models produce clean, conversion-focused copy that sounds like your brand and needs far fewer touch-ups. This means faster campaign launches and better ROI.

2. AI Chat Interfaces

AI solutions in customer service need to respond spontaneously, empathetically, and in context. High perplexity here may result in irrelevant replies or a broken exchange. We rigorously evaluate our chat models for perplexity to ensure they provide useful, human-like responses in real time. When someone asks a question, the models respond clearly rather than in bursts of nonsense. All of this adds up to a smoother customer experience and lower support costs.

3. Internal Business Tools

The same applies to tools that automatically generate internal content, such as report summaries, meeting minutes, or email drafts. To be useful, generative AI in business needs to handle internal communication with poise and awareness of tone. Lower perplexity corresponds to less surprising outputs, so people can trust them and share them directly with little or no editing.

In all cases, Perplexity Labs makes the AI less robotic, more intuitive, and always business-ready.

Problems with Relying on Perplexity Alone

But perplexity, however useful and widely used, is not without its problems. At the most basic level, perplexity is a measure of fluency: it tells us how confidently an AI model predicts the next word. A low perplexity score does not guarantee that the output is factually accurate, contextually appropriate, or ethical. In practice, especially in high-stakes business environments, relying on perplexity alone is risky.

At Perplexity Labs, we know that real AI robustness goes deeper than fluency. That’s why we rely on a multi-pronged evaluation strategy, particularly when launching AI business solutions and AI marketing solutions, where tone, accuracy, and relevance all matter.

Here’s why perplexity needs to be supplemented with other methods:

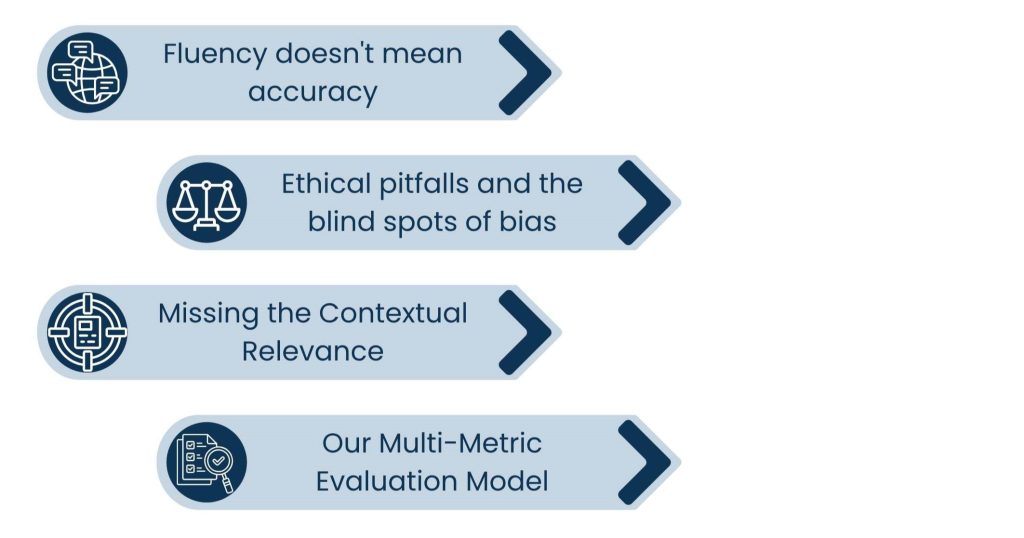

1. Fluency doesn’t mean accuracy

- An AI can produce sentences that are 100 percent fluent but entirely wrong or misleading.

- In generative AI in business, for example, a low-perplexity output may contain outdated data or omit a key product feature.

- This is a serious problem when AI-generated content is used in investor decks, HR communications, or customer-facing documents.

- At Perplexity Labs, we avoid this trap by verifying outputs with human fact-checking combined with real-time data alignment.

2. Ethical pitfalls and the blind spots of bias

- Models trained on biased datasets can achieve low perplexity but still generate harmful or discriminatory responses.

- This is particularly risky in AI tools that touch recruitment, customer service, or marketing.

- We run fairness and bias audits, especially for AI marketing solutions, to ensure that content is sensitive to cultural, gender, and linguistic diversity.

- Pairing perplexity with sentiment analysis and intent filters helps protect brands that use our solutions from reputational damage.

3. Missing the Contextual Relevance

- A model can score well on perplexity yet still give responses that are off-topic or unrelated to the user’s intent.

- In AI business solutions, such as chatbots or virtual assistants, it could cause user dissatisfaction and workflow hiccups.

- At Perplexity Labs, we test intent alignment alongside perplexity to make sure outputs are relevant, particularly in high-stakes or transactional conversations.

4. Our Multi-Metric Evaluation Model

- We combine perplexity with accuracy scoring, human review loops, domain-competency benchmarks, and online feedback collection.

- This multi-layered analysis is what enables us to deploy generative AI in business that’s not just fluent, but valuable, responsible, and on brand.

- For us, perplexity is a signal, not a conclusion. Combined with human intelligence and contextual review, it becomes a powerful component of a broader trust framework.
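A minimal sketch of how such a multi-metric gate might look, in which perplexity is one check among several and a fluent output can still be blocked. The metric names, thresholds, and example scores are hypothetical; how each metric is actually measured (fact-checking, intent tests, bias audits, human review) is outside the sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical release criteria; real values would depend on the use case.
    max_perplexity: float = 15.0
    min_fact_accuracy: float = 0.95
    min_intent_match: float = 0.90
    max_bias_flags: int = 0


@dataclass
class EvalResult:
    perplexity: float     # fluency signal from held-out text (lower is better)
    fact_accuracy: float  # share of checked claims verified by fact-checkers, 0-1
    intent_match: float   # share of test prompts judged on-intent, 0-1
    bias_flags: int       # findings from a fairness/bias audit
    human_approved: bool  # outcome of the human review loop


def release_blockers(r: EvalResult, t: Thresholds = Thresholds()) -> list[str]:
    """Every failed check; an empty list means the model may ship."""
    blockers = []
    if r.perplexity > t.max_perplexity:
        blockers.append("fluency: perplexity too high")
    if r.fact_accuracy < t.min_fact_accuracy:
        blockers.append("accuracy: too many unverified claims")
    if r.intent_match < t.min_intent_match:
        blockers.append("relevance: off-intent responses")
    if r.bias_flags > t.max_bias_flags:
        blockers.append("ethics: open bias-audit findings")
    if not r.human_approved:
        blockers.append("review: not approved by human reviewers")
    return blockers


# Fluent (low perplexity) but not yet trustworthy.
fluent_but_wrong = EvalResult(perplexity=6.2, fact_accuracy=0.81,
                              intent_match=0.93, bias_flags=0, human_approved=False)
print(release_blockers(fluent_but_wrong))
```

The example passes the perplexity check yet is still blocked on accuracy and human review, which is exactly the gap between fluency and trustworthiness described above.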

What Is The Future of AI Language Evaluation at Perplexity Labs?

As language models become more powerful and more deeply embedded in business processes, our methods of evaluation and accountability must evolve too. Perplexity has served as a baseline measure, but it only begins to address what businesses today require from their AI systems.

Perplexity Labs sees the future of AI assessment as going beyond raw fluency to incorporate context, emotional intelligence, and user-centric impact. Our experience delivering cutting-edge AI business solutions has taught us that success comes from AI performance that meets real-world expectations, not just strong model scores.

Here’s how we’re advancing the future of AI assessment:

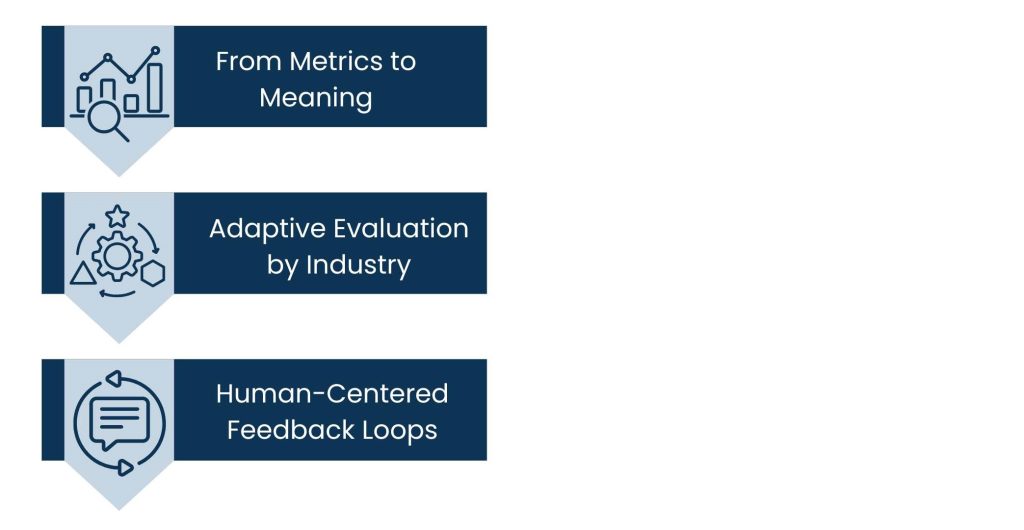

1. From Metrics to Meaning

- We are moving from traditional single-score metrics to layered assessments that cover tone, coherence, and goal completion.

- For instance, on a generative AI platform that summarizes internal business reports, we don’t only consider fluency; we also check that the content is accurate, aligned with reporting standards, and appropriate to its context.

- Our evaluation models now include qualitative checkpoints where human reviewers assess the nuance and consistency of language, particularly in industries like finance, law, and health care.

- Our expanded criteria give a fuller sense of how the AI performs, not just what it predicts.

2. Adaptive Evaluation by Industry

- Different sectors have different language styles, compliance rules, and tones.

- AI marketing solutions, for example, focus on persuasive messaging that aligns with a client’s brand. Our review models check not only readability but also emotional tone and alignment with conversion goals.

- By contrast, AI business applications in compliance or support systems demand well-structured responses, as little ambiguity as possible, and policy-safe language.

- We’re creating adaptive scoring models that compare the performance of AI systems to the norms and KPIs of the industries they serve.
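The adaptive idea can be sketched as per-industry weight profiles applied to the same set of metric scores. The profiles, the metric names, and the mapping from perplexity to a 0 to 1 fluency score are all assumptions made for illustration, not a description of any production scoring model.

```python
import math

# Hypothetical weight profiles; each must sum to 1.
PROFILES: dict[str, dict[str, float]] = {
    "marketing":  {"fluency": 0.25, "tone": 0.35, "conversion_fit": 0.30, "policy_safety": 0.10},
    "compliance": {"fluency": 0.20, "tone": 0.05, "conversion_fit": 0.00, "policy_safety": 0.75},
}
for name, weights in PROFILES.items():
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError(f"weights for {name!r} must sum to 1")


def fluency_from_perplexity(ppl: float, ref_ppl: float = 10.0) -> float:
    """Map perplexity (always >= 1) to a 0-1 score: 1.0 at ppl=1, 0.5 at ppl=ref_ppl."""
    return 1.0 / (1.0 + math.log(ppl) / math.log(ref_ppl))


def industry_score(metrics: dict[str, float], industry: str) -> float:
    """Weighted sum of 0-1 metric scores under the given industry's profile."""
    return sum(w * metrics[m] for m, w in PROFILES[industry].items())


# One set of invented scores for the same output, judged by two industries' norms.
metrics = {"fluency": fluency_from_perplexity(10.0), "tone": 0.9,
           "conversion_fit": 0.8, "policy_safety": 0.6}
for industry in PROFILES:
    print(industry, round(industry_score(metrics, industry), 3))
```

With these made-up numbers, the same output scores noticeably higher under the marketing profile than under the compliance profile, because its weak policy-safety score carries far more weight in compliance.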

3. Human-Centered Feedback Loops

- We are integrating continuous user feedback into evaluation frameworks, particularly for client-facing applications.

- We gather real-world data through post-deployment reviews, A/B testing, and in-context prompts, and use it to drive iterative refinement.

- This lets us continuously monitor how well the model adapts over time and how users experience it, not only how it scores on isolated tests.

- Our team at Perplexity Labs believes that weaving human judgment and business priorities into model evaluation makes AI more trustworthy and accountable.
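As one illustration of the A/B testing mentioned above, here is a minimal sketch of a standard two-proportion z-test comparing the share of positive user ratings for two model variants. The choice of test and the rating counts are assumptions for illustration, not a description of an actual feedback pipeline.

```python
import math


def two_proportion_z(pos_a: int, n_a: int, pos_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test on positive-rating rates.

    Returns (z, p_value); z > 0 means variant B's positive rate is higher.
    """
    p_a, p_b = pos_a / n_a, pos_b / n_b
    pooled = (pos_a + pos_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all ratings identical; the test is undefined")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|)) for a standard normal
    return z, p_value


# Invented post-deployment feedback: thumbs-up counts out of rated conversations.
z, p = two_proportion_z(pos_a=420, n_a=1000, pos_b=470, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
if p < 0.05:
    print("variant B's higher positive rate is unlikely to be chance at the 5% level")
```

A significance test like this only says whether a difference in ratings is likely real; deciding whether the better-rated variant should replace the current one still depends on the other checks described in this section.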

By integrating quantitative metrics, qualitative review, industry alignment, and user feedback into a single system, Perplexity Labs is building a best-in-class, future-proof evaluation model. It’s not just about what the AI gets right; it’s about what it gets right for your business.

Conclusion

As language models increasingly shape how businesses are run, there’s never been a more important time to scrutinize their performance. Perplexity is still a strong and stable yardstick for fluency and language prediction, but it’s only one part of a much wider picture.

At Perplexity Labs, we start with perplexity and then go deeper by folding in contextual checks, industry benchmarks, and human review.

Whether we’re developing AI business solutions that optimize workflows or AI marketing solutions that define a brand, our ethos is that every model should operate with clarity, consistency, and intent. Our evaluation approaches are tailored to the real-world expectations of modern enterprises, where accuracy, tone, and trustworthiness matter as much as linguistic fluency.

As we head into a world where generative AI in business is a common tool in every industry, the responsibility to scrutinize its output with depth and care only grows. Here at Perplexity Labs, we want to be the ones who set the standard, so that the AI we build doesn’t just sound smart, but is smart, adding real value to our clients’ businesses.

About Us

Tasks Expert offers top-tier virtual assistant services from highly skilled professionals based in India. Our VAs handle a wide range of tasks, from part-time personal assistance to specialized services such as remote IT support and professional bookkeeping, helping businesses worldwide streamline operations and boost productivity.

Ready to elevate your business? Book a Call and let Tasks Expert take care of the rest.